Table of Contents

Table of Contents

Web scraping is an effective tool for gathering data from websites. Among the many tools to be had, Selenium stands proud because of its versatility and performance. This guide will explore 5 important tips for successful Selenium web scraping. These tips will help you scrape records more effectively and avoid common pitfalls. Whether you’re new to web scraping or looking to improve your skills, this guide is for you.

What is Selenium?

Selenium is a tool that is open-source and is utilized for automating web browsers. It’s widely used for checking out web applications however it excels at web scraping. By simulating user actions like clicking, typing, and scrolling, Selenium can extract information from websites that might be hard to scrape with other tools.

Why Use Selenium for Web Scraping?

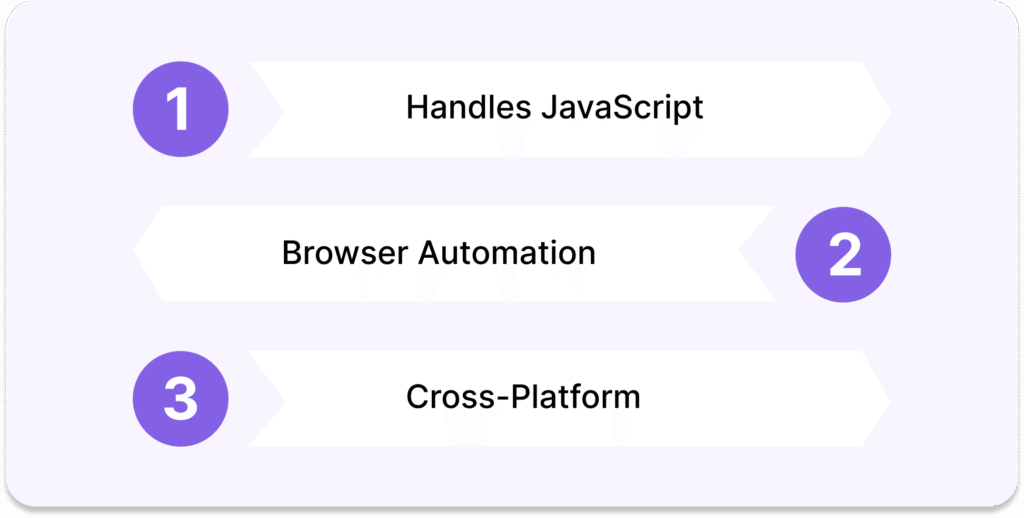

- Handles JavaScript: Many websites use JavaScript to load content. Selenium can interact with these elements, making it possible to scrape dynamic content.

- Browser Automation: Selenium can control a web browser, allowing you to navigate web pages, fill out forms, and click buttons automatically.

- Cross-Platform: Selenium supports multiple programming languages (like Python, Java, and C#) and works on various operating systems.

Understand the Basics of Selenium Web Scraping

Before diving into the complexities of Selenium Web Scraping, it is critical to grasp the fundamentals. Selenium is an effective tool that allows you to control web browsers through programs and automatically extract data from websites. It supports diverse programming languages like Python, Java, C#, and more. For most users, Python is the preferred language due to its simplicity and the availability of vast libraries.

Getting Started with Selenium

To begin with Web Scraping, you need to install the Selenium library and a web driver. The web driver acts as a bridge between your code and the browser. Here’s a quick example using Python:

Next, download the appropriate web driver for your browser, such as ChromeDriver for Google Chrome. Here’s a basic script to open a webpage and extract data:

Use Explicit Waits to Handle Dynamic Content

Web scraping with Selenium often includes managing dynamic content that loads asynchronously. This can be difficult if elements aren’t immediately available. Using explicit waits can help ensure that your script waits for the necessary elements to load earlier than trying to interact with them.

Implementing Explicit Waits

Explicit waits in Selenium are used to pause your script until a condition is met. This is particularly useful for pages that use JavaScript to load content dynamically. Here’s how you can implement explicit waits:

Using explicit waits ensures that your Selenium Web Scraping script is robust and can handle various loading times and dynamic content.

Navigate Complex Websites with Ease

Many websites today are complex and use various navigation techniques like dropdown menus, pop-ups, and AJAX calls. To effectively perform Selenium Web Scraping on such sites, you must know how to navigate these complexities.

Handling Dropdowns and Pop-Ups

Selenium provides methods to handle various web elements. For instance, to interact with dropdown menus, you can use the Select class:

For pop-ups, you can switch to the alert and handle it accordingly:

Mastering these navigation techniques will make your Selenium Web Scraping projects more efficient and effective.

Optimize Your Selenium Web Scraping for Performance

Performance optimization is crucial in Web Scraping, especially when dealing with large datasets or multiple pages. Here are some tips to enhance performance:

Reduce Browser Interactions

Every interaction with the browser adds to the execution time. Minimizing these interactions can speed up your script. For example, rather than navigating through pages to extract data, you could immediately fetch the page supply and use an HTML parser like BeautifulSoup to extract the information:

Headless Browsing

Running the browser in headless mode (without a graphical user interface) can also improve performance. Here’s how you can enable headless mode:

Optimizing your Selenium Web Scraping scripts ensures that they run faster and consume fewer resources.

Follow Ethical Guidelines and Best Practices

While Web Scraping is a powerful tool, it’s essential to follow ethical guidelines and best practices to avoid legal issues and ensure responsible use.

Respect Website Policies

Always check the website’s robots.Txt document and terms of service to understand their scraping policies. Some websites explicitly limit scraping and ignoring these rules can cause legal consequences.

Avoid Overloading Servers

Excessive scraping can overload a website’s server, leading to downtime or blocking of your IP address. Introduce pauses between requests to simulate natural browsing patterns.

Following these best practices ensures that your Selenium Web Scraping activities are ethical and respectful of website owners’ resources.

Exploring Advanced Features of Selenium

Selenium offers a range of advanced features that can enhance your web scraping capabilities. These include:

1. Handling Authentication and Cookies

Selenium can automate login processes and handle cookies effectively. This feature is crucial for scraping data from authenticated sections of websites.

2. Interacting with Dynamic Elements

Advanced techniques such as handling iframes, drag-and-drop actions, and interacting with canvas elements can be achieved using Selenium’s advanced methods.

Scaling Selenium Web Scraping Projects

Scaling up your Selenium projects involves managing larger datasets, multiple websites, or continuous scraping tasks. Consider these strategies:

1. Using Headless Browsers for Efficiency

Running Selenium in headless mode reduces resource consumption and speeds up scraping processes, making it ideal for large-scale operations.

2. Implementing Proxy Rotation and IP Management

To avoid IP bans and access restrictions, integrate proxy servers and IP rotation strategies into your Selenium scripts for seamless and uninterrupted scraping.

Conclusion

Selenium Web Scraping offers a powerful way to extract data from websites, but it requires a good understanding of the tool and adherence to best practices. By mastering the basics, using explicit waits, navigating complex sites, optimizing performance, and following ethical guidelines, you can make the most out of Selenium Web Scraping. Happy scraping!

FAQs

To handle dynamic content, use Selenium’s WebDriverWait and ExpectedConditions to pause your script until the desired elements are present. Scroll to the bottom of pages to load more content, and capture AJAX requests to parse JSON responses.

Prerequisites include basic programming knowledge, understanding of HTML and CSS, installation of Python and Selenium, installation of a web browser and WebDriver, and familiarity with XPath and CSS selectors.

➡️ Install Selenium and WebDriver

➡️ Set up your Python environment

➡️ Launch the browser

➡️ Navigate to the target website

➡️ Locate and extract data using selectors like class names, IDs, or XPath

Selenium provides several strategies for finding elements, including searching by tag name, using HTML classes or IDs, and employing CSS selectors or XPath expressions. Utilize the browser’s developer tools to quickly explore the page structure.