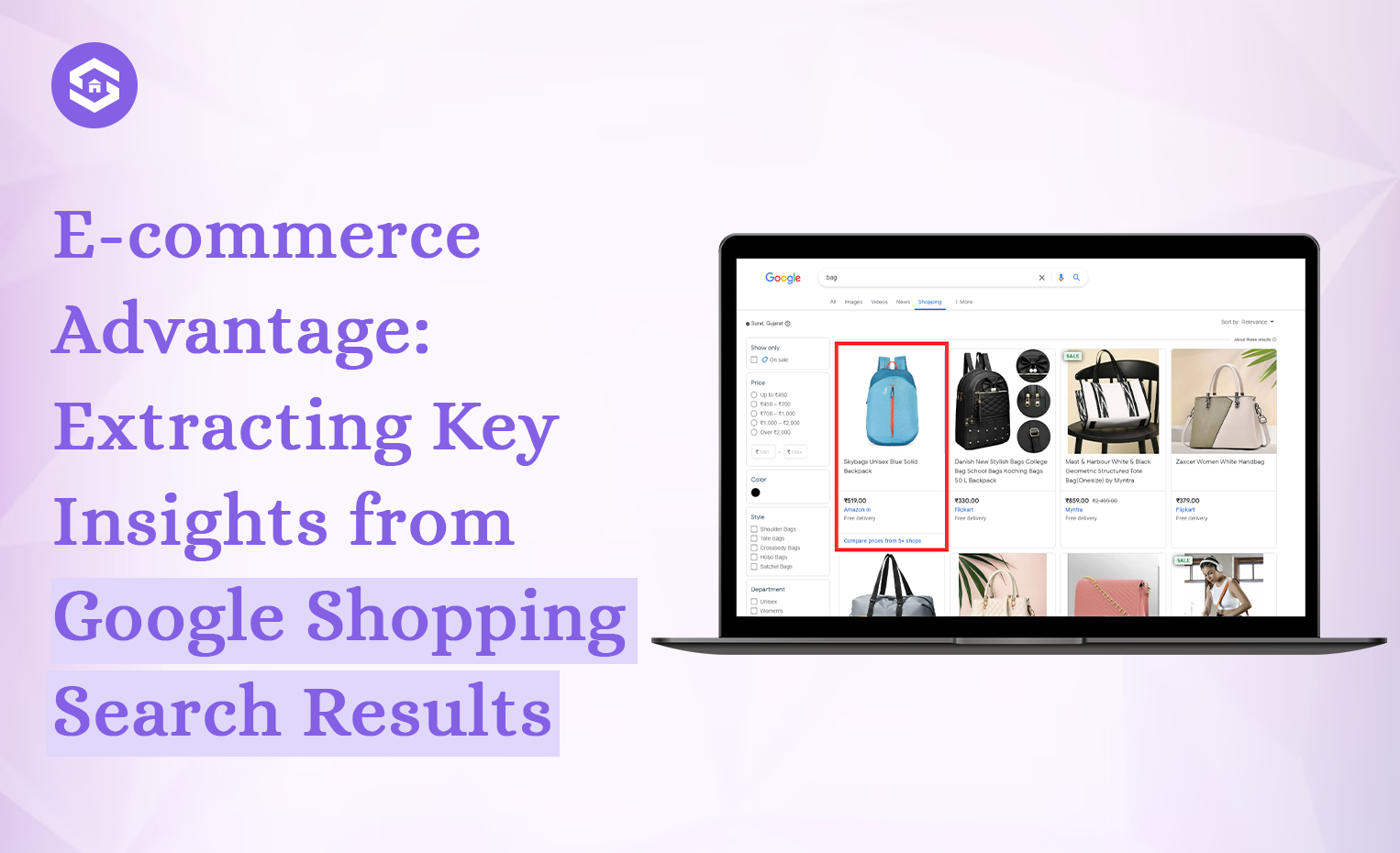

E-commerce changes constantly, so you have to stay alert. Staying ahead of the competition takes strong products and marketing strategies, plus a clear understanding of the market they compete in.

That’s where SERP scraping comes in: a powerful way to pull useful information out of shopping search results.

With the help of SERPHouse, a top SERP API service, this guide goes into detail about how to scrape shopping results from Search Engine Results Pages (SERPs).

We will cover the pros and cons, the legal and ethical issues, and, most importantly, a step-by-step process for scraping shopping data.

Why Scrape Shopping Search Results?

Imagine having a real-time window into what products rank for your target keywords, their pricing, and even snippets of customer reviews. This is the power of scraping shopping SERPs.

Here are some key benefits:

- Competitive Analysis: Uncover your competitors’ top-ranking products, pricing strategies, and the keywords they’re targeting. This allows you to refine your product offerings and pricing to stay competitive.

- Market Research: Gain valuable insights into market trends, identify popular products within your niche, and discover new customer demands. This empowers you to make informed decisions about product development and marketing campaigns.

- Price Tracking: Monitor price fluctuations of your own products and those of your competitors. This helps you adjust your pricing strategy dynamically to ensure optimal profitability.

- Identify New Opportunities: Discover new product categories or sub-niches within your broader market. This opens doors for product diversification and expansion into untapped markets.

Legality and Ethics of Scraping

Before diving into the how-to, it’s crucial to address the legal and ethical considerations surrounding scraping. Here’s what you need to keep in mind:

Respect Robots.txt:

Most websites publish a robots.txt file that tells bots, including web scrapers, which parts of the site they may access. Always follow those rules, and review the site’s terms of service as well, since robots.txt does not replace them.
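As a rough illustration of a robots.txt check, the sketch below uses Python’s standard `urllib.robotparser` module. The robots.txt content, the `my-scraper` user agent, and the `example.com` URLs are made up for the example.

```python
from urllib.robotparser import RobotFileParser


def is_allowed(robots_txt: str, user_agent: str, url: str) -> bool:
    """Return True if the given robots.txt text lets user_agent fetch url."""
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())
    return parser.can_fetch(user_agent, url)


# Hypothetical robots.txt content, for illustration only.
EXAMPLE_ROBOTS = """\
User-agent: *
Disallow: /private/
"""

print(is_allowed(EXAMPLE_ROBOTS, "my-scraper", "https://example.com/products"))      # True
print(is_allowed(EXAMPLE_ROBOTS, "my-scraper", "https://example.com/private/data"))  # False
```

Against a live site you would typically call the parser’s `set_url()` and `read()` methods to download the file instead of passing the text in by hand.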

Be a Responsible Scraper:

Don’t overload a website with excessive scraping requests. Implement a reasonable delay between requests to avoid overwhelming the server.
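One simple way to space out requests is a fixed pause between calls. The helper below is a minimal sketch: `run_throttled` and the one-second default delay are illustrative choices, not a recommendation from any particular site or API.

```python
import time
from typing import Callable, Iterable, List, TypeVar

T = TypeVar("T")
R = TypeVar("R")


def run_throttled(
    items: Iterable[T],
    action: Callable[[T], R],
    delay_seconds: float = 1.0,
    sleep: Callable[[float], None] = time.sleep,
) -> List[R]:
    """Apply action to each item, pausing delay_seconds between calls."""
    results: List[R] = []
    for index, item in enumerate(items):
        if index > 0:
            # Wait before every call except the first one.
            sleep(delay_seconds)
        results.append(action(item))
    return results
```

Passing the `sleep` function in as a parameter keeps the helper easy to test without actually waiting.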

Focus on Public Data:

Only scrape publicly available data displayed on SERPs. Avoid scraping private or sensitive information.

Important Note: Laws and regulations around scraping can vary by region. It’s always best to consult a legal professional for specific guidance in your jurisdiction.

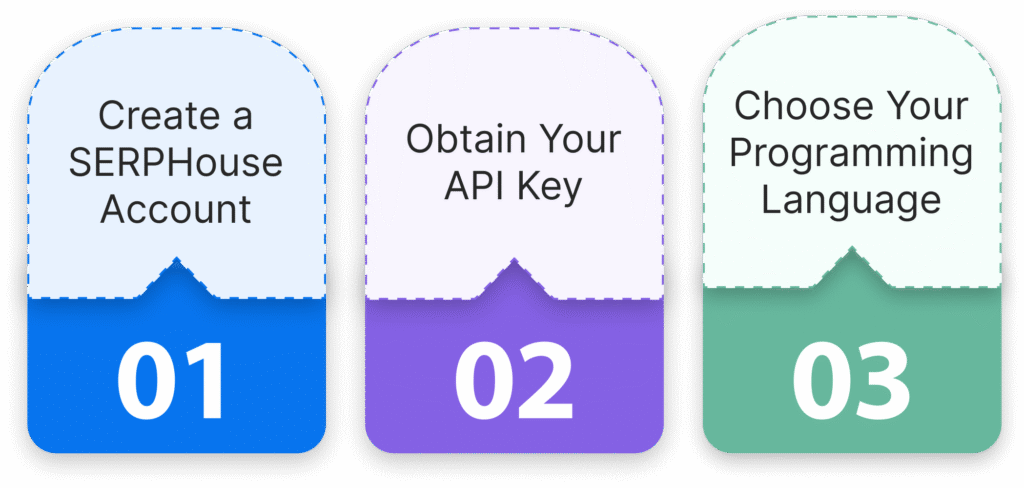

Get Equipped: Setting Up SERPHouse

Now, let’s get down to business! Here’s how to set up SERPHouse and prepare for scraping shopping results:

Create an Account: Head to the SERPHouse website and sign up for a free account. This will grant you access to a limited number of monthly API requests.

Obtain API Key: Once registered, navigate to your account dashboard and locate your API key. This unique key identifies your account and is required to make API requests.

Choose Your Language: SERPHouse offers libraries and code examples for programming languages such as Python, Node.js, and PHP. Pick the language you’re most comfortable with for writing the scraping script.
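A common way to keep the API key out of your source code is to read it from an environment variable. The sketch below assumes a variable named `SERPHOUSE_API_KEY`; that name is a convention chosen for this example, not something SERPHouse prescribes.

```python
import os


def load_api_key(env_var: str = "SERPHOUSE_API_KEY") -> str:
    """Read the API key from an environment variable (the name is an assumption)."""
    key = os.environ.get(env_var, "").strip()
    if not key:
        raise RuntimeError(f"Set the {env_var} environment variable to your API key")
    return key
```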

Building Your Shopping SERP Scraping Script

Here’s a breakdown of the steps involved in crafting your SERP scraping script with SERPHouse:

1. Import Necessary Libraries: Import the required libraries to make API requests and handle the JSON response data. The specific libraries will depend on your chosen programming language.

2. Define the Search Query: Set the search term for which you want to scrape shopping results. This could be a broad term like “running shoes” or a more specific one like “women’s running shoes for flat feet”.

3. Construct the API Request: Use the SERPHouse API library to build the API request. Specify the search query, desired SERP features (f=shopping), and other relevant parameters, such as location and language.

4. Execute the API Request: Integrate your API key and make the request to the SERPHouse API using the defined parameters.

5. Parse the JSON Response: The response from SERPHouse will be in JSON format. Use your chosen programming language’s tools to parse this data and extract the desired information.

Data Points to Extract:

- Product Title: The title of the product listing.

- Product URL: The web address of the product page.

- Price: The displayed price of the product.

- Merchant Name: The name of the retailer selling the product.

- Image URL: The URL of the product image.

- Review Snippets: Excerpts from customer reviews displayed on the SERP.
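Once the JSON is parsed, the data points above could be collected along these lines. This is only a sketch: the response keys in `FIELD_MAP` (`link`, `merchant`, `image`, `review`, and so on) are hypothetical placeholders, so check them against an actual response before relying on them.

```python
from typing import Any, Dict, Iterable, List

# Output column -> key in each result item.
# The keys on the right are hypothetical; adjust them to the real payload.
FIELD_MAP = {
    "title": "title",
    "url": "link",
    "price": "price",
    "merchant": "merchant",
    "image_url": "image",
    "review_snippet": "review",
}


def extract_products(items: Iterable[Dict[str, Any]]) -> List[Dict[str, Any]]:
    """Keep only the fields of interest; missing fields become None."""
    return [
        {column: item.get(key) for column, key in FIELD_MAP.items()}
        for item in items
    ]
```

Using `dict.get` means a listing without, say, a review snippet yields `None` for that column instead of raising an error.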

6. Store the Extracted Data: Choose a suitable method to store the extracted shopping data. Here are some options:

- Create a CSV File: This is a common and easy-to-use format for storing scraped data in a tabular format. Each row can represent a product, with columns for title, URL, price, and other extracted information.

- Export to a Database: For larger datasets and complex analysis, consider storing the data in a structured database management system like MySQL or PostgreSQL. This allows for efficient querying and manipulation of the data.
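For the CSV option, Python’s standard `csv` module is usually enough. The sketch below assumes each product is a dictionary; the column names in `COLUMNS` are illustrative and should match whatever fields you extracted.

```python
import csv
from typing import Any, Dict, Iterable, TextIO

# Illustrative column names; match these to your extracted fields.
COLUMNS = ["title", "url", "price", "merchant", "image_url"]


def write_products_csv(products: Iterable[Dict[str, Any]], handle: TextIO) -> None:
    """Write one row per product; unknown keys are ignored, missing ones left blank."""
    writer = csv.DictWriter(handle, fieldnames=COLUMNS, extrasaction="ignore")
    writer.writeheader()
    for product in products:
        writer.writerow(product)
```

To write to disk, open the file with `open("shopping_results.csv", "w", newline="", encoding="utf-8")` and pass the handle in; `newline=""` is what the `csv` module documentation recommends to avoid blank lines on Windows.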

7. Automate the Process: If you plan on scraping shopping SERPs regularly, consider automating the script. You can achieve this by scheduling the script to run periodically using cron jobs (Linux/Unix) or Task Scheduler (Windows).

Here’s an example Python code snippet to illustrate the basic structure of a scraping script using SERPHouse’s Python library:
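The sketch below covers steps 1 to 4 with plain HTTP from Python’s standard library rather than a dedicated client library, since the library’s interface is not shown here. The endpoint URL, the Bearer-token header, and the payload field names (`q`, `domain`, `loc`, `lang`, `device`, `serp_type`) are assumptions; confirm each against the SERPHouse API documentation before use.

```python
import json
import urllib.request
from typing import Any, Dict

# Assumed endpoint; confirm against the SERPHouse API documentation.
API_URL = "https://api.serphouse.com/serp/live"


def build_request(
    api_key: str,
    query: str,
    location: str = "United States",
    language: str = "en",
) -> urllib.request.Request:
    """Build a POST request for shopping results (field names are assumptions)."""
    payload = {
        "data": {
            "q": query,
            "domain": "google.com",
            "loc": location,
            "lang": language,
            "device": "desktop",
            "serp_type": "shopping",  # assumed way to ask for shopping results
        }
    }
    return urllib.request.Request(
        API_URL,
        data=json.dumps(payload).encode("utf-8"),
        headers={
            "Authorization": f"Bearer {api_key}",
            "Content-Type": "application/json",
        },
        method="POST",
    )


def fetch_shopping_results(api_key: str, query: str, timeout: float = 30.0) -> Dict[str, Any]:
    """Send the request and return the parsed JSON response."""
    request = build_request(api_key, query)
    with urllib.request.urlopen(request, timeout=timeout) as response:
        return json.loads(response.read().decode("utf-8"))
```

A call such as `fetch_shopping_results(api_key, "running shoes")` would then return the parsed response as a dictionary, ready for the extraction and storage steps.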

Remember: This is a simplified example. You’ll need to customize the script based on your specific needs and chosen programming language.

Analyzing Your Scraped Data

Once you store your shopping data, it’s time to unlock its potential. Here are some ways to analyze the scraped data:

- Identify Top-Ranking Products: Analyze which products consistently rank at the top of the SERPs for your target keywords. This can reveal what resonates with your audience and inform your product development strategy.

- Compare Pricing Strategies: Track competitor pricing across different products and categories. This allows you to optimize pricing to stay competitive and maximize profit margins.

- Monitor Market Trends: Analyze changes in product rankings and pricing over time. This can help you identify emerging trends within your market and adjust your business strategy accordingly.
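As one small example of this kind of analysis, the sketch below averages prices per merchant. It assumes each product is a dictionary with `price` and `merchant` keys and that prices look like `$1,299.50` (comma as thousands separator, dot as decimal point); other locales would need a different parser.

```python
import re
from collections import defaultdict
from statistics import mean
from typing import Any, Dict, Iterable, List, Optional


def parse_price(text: str) -> Optional[float]:
    """Extract the first number from a price string such as '$1,299.50'."""
    match = re.search(r"\d+(?:\.\d+)?", text.replace(",", ""))
    return float(match.group()) if match else None


def average_price_by_merchant(products: Iterable[Dict[str, Any]]) -> Dict[str, float]:
    """Average the parseable prices for each merchant, rounded to 2 decimals."""
    prices: Dict[str, List[float]] = defaultdict(list)
    for product in products:
        value = parse_price(product.get("price") or "")
        if value is not None:
            prices[product.get("merchant") or "unknown"].append(value)
    return {merchant: round(mean(values), 2) for merchant, values in prices.items()}
```

Running the same calculation on each scheduled scrape and keeping the results with a timestamp gives you a simple price history to compare over time.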

By effectively scraping and analyzing shopping SERPs, you gain a significant edge in the ever-competitive e-commerce landscape.

SERPHouse is a user-friendly API that focuses on responsible scraping, empowering you to gather valuable market intelligence and propel your e-commerce business forward.

Remember: Always practice responsible scraping and respect website guidelines. Use the insights gained ethically and legally to improve your e-commerce strategy and, ultimately, deliver a better customer experience.