Table of Contents

Web scraping and API data collection are getting harder in 2026. Websites are smarter now: they block IPs quickly, return errors, or limit how much data you can collect. If you scrape without protection, your requests can be blocked again and again. That’s why using the best proxy servers is critical: they help you stay unnoticed, rotate IP addresses, and keep your data extraction running quietly and continuously.

A proxy hides your real IP address and helps you collect data safely. It acts like a shield between you and the website. Many people now use residential proxies (which appear to be normal users) or rotating proxies (which automatically change IP addresses) to stay safe while web scraping or using APIs.

But here’s the problem: not all proxies work the same. Some are fast, some are slow. Some get blocked easily. Others cost too much. So, how do you know which one is right for your scraping or API tasks?

That’s what this blog is here to help with.

What You’ll Learn in This Blog:

- What proxy servers really do — in simple words

- The types of proxies and how they’re different

- How proxies help with API scraping and real-time data collection

- Common problems (like blocks or slow speeds) — and how the right proxy fixes them

- Smart ways to add proxies into your scraping or API setup

- Tips for large-scale projects that need strong and stable proxy support

- A list of the best proxy providers to choose from in 2026

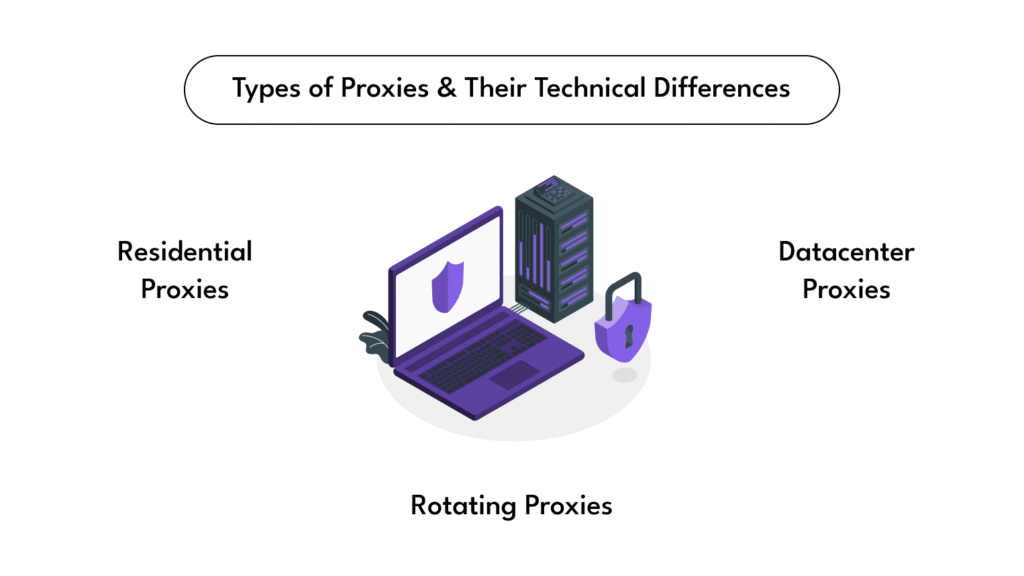

Types of Proxies & Their Technical Differences

When it comes to proxies, knowing your options can save you a ton of trouble. Here’s a quick, easy guide to the main types you’ll see in 2026 — all explained simply.

Residential Proxies

These proxies come from real people’s homes. Because they’re actual devices with real IPs, websites treat them like normal users. That makes residential proxies excellent for scraping sites that are strict about blocking bots or limiting access based on location. If you want to avoid bans and get reliable data from tough sites, this is your best bet.

Datacenter Proxies

Datacenter proxies are different — they come from big servers in data centers, not homes. They’re usually cheaper and faster, but websites can spot and block them more easily. These are great if you need speed and are working with APIs or sites that don’t have heavy restrictions.

Rotating Proxies

Rotating proxies aren’t a separate type but a feature. They automatically change your IP address after every request or at set intervals. This rotation helps you avoid blocks and keeps your scraping or API calls running smoothly. You can choose between rotating residential and rotating datacenter proxies, depending on your needs.

How Proxies Interact with APIs

When you use APIs to collect data, proxies play a quiet but powerful role behind the scenes. Think of an API as a gatekeeper: it decides who gets in, how much data they can access, and how often they can ask for more. Without proxies, your IP address is fully visible, which makes it easy for that gatekeeper to block you if you send many requests or come from a restricted location.

This is where proxies step in.

By routing your API requests through a proxy server, your real IP gets hidden. Instead, the API sees the proxy’s IP. This helps in a few major ways:

- Avoiding rate limits and bans: Many APIs limit how many requests one IP can make in a certain time. Using proxies—especially rotating ones—lets you spread your requests across many IPs, so you don’t hit these limits quickly.

- Accessing geo-restricted data: Some APIs return different data based on your location. With proxies, you can use IPs from specific countries or regions to get localized results.

- Improving reliability: If one proxy IP gets blocked or fails, your system can switch to another without interrupting the data flow.

The best part? Smart proxy setups work seamlessly with your API calls. You don’t have to manually change IPs or worry about blocks—the proxy handles it automatically, letting you focus on collecting and using the data.
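As a minimal sketch of what "routing your API requests through a proxy server" looks like in practice, the snippet below builds an HTTP client with Python’s standard `urllib` that sends traffic through a proxy. The proxy address and credentials are placeholders invented for this example, not a real provider endpoint.

```python
import urllib.request

# Hypothetical proxy address; replace it with the endpoint your provider gives you.
PROXY_URL = "http://user:pass@proxy.example.com:8000"


def build_proxy_opener(proxy_url: str) -> urllib.request.OpenerDirector:
    """Return an opener that sends HTTP and HTTPS traffic through proxy_url."""
    handler = urllib.request.ProxyHandler({"http": proxy_url, "https": proxy_url})
    return urllib.request.build_opener(handler)


opener = build_proxy_opener(PROXY_URL)

# Usage (makes a real network call, so it is left commented out):
# with opener.open("https://api.example.com/items", timeout=10) as resp:
#     body = resp.read()
```

With this in place, the API sees the proxy’s IP instead of yours. Rotation and failover are layered on top of the same idea.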

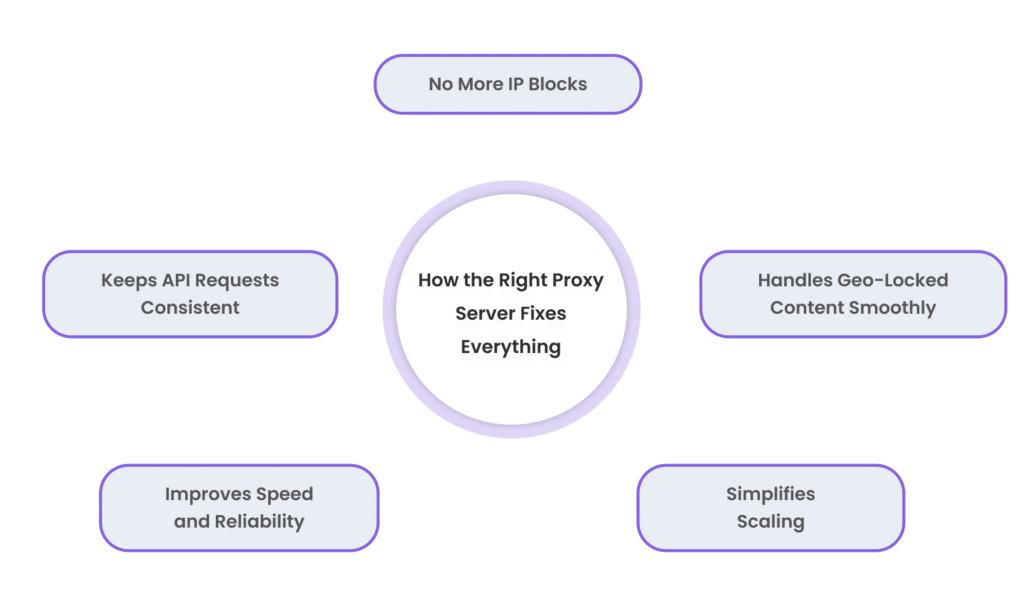

How the Best Proxy Servers Fix Everything

Let’s face it: scraping data or calling APIs sounds simple in theory. But when you actually start doing it at scale, things break fast. You hit rate limits. You get IP bans. Sometimes you get blocked before your request even lands. That’s not just frustrating; it’s a total waste of time and resources.

Now, here’s the truth most people overlook: the problem usually isn’t your scraper or your API tool — it’s your proxy.

The best proxy server can fix almost every major issue in a scraping or API setup. Here’s how:

1. No More IP Blocks

If you’re using the same IP for every request, websites will catch on quickly. They see unusual traffic from one address and shut you down. A high-quality residential proxy or a rotating proxy spreads your requests across multiple IPs, making it look like real users from different locations — not a bot. That means fewer bans and more successful requests.

2. Handles Geo-Locked Content Smoothly

Want to scrape prices in the U.S., compare with Germany, or track trends in Brazil? Without a proxy that supports location targeting, you’re out of luck. But with a proxy provider that gives you global IPs, you can switch locations easily and get localized data from APIs or web pages. You see what real users in those areas see — and that’s powerful for research, SEO, and competitive tracking.

3. Improves Speed and Reliability

Some proxies are slow or unstable, which makes your scraper lag or your API calls fail. A reliable proxy server offers fast, clean IPs and strong uptime. You’ll get quicker responses, fewer errors, and a smoother workflow — especially important when handling thousands of requests per day.

4. Simplifies Scaling

Once your project grows, your traffic volume grows too. The right proxy lets you scale up without changing your whole setup. With built-in IP rotation, global coverage, and high concurrency, a good proxy provider makes it easy to move from 1,000 to 100,000+ requests daily — without crashing or slowing down.
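To get a rough feel for what scaling means for pool size, here is a back-of-the-envelope calculation. It assumes a fixed per-IP daily limit (the 100-requests-per-IP-per-day figure used as an illustration in the FAQs) and perfectly even distribution across IPs. Real limits vary by site and are often not published, so treat the result as a lower bound.

```python
def min_pool_size(daily_requests: int, per_ip_daily_limit: int) -> int:
    """Smallest number of IPs that keeps every IP at or under its daily limit."""
    if daily_requests < 0 or per_ip_daily_limit <= 0:
        raise ValueError("daily_requests must be >= 0 and the limit must be > 0")
    # Integer ceiling division: round up so no IP exceeds the limit.
    return (daily_requests + per_ip_daily_limit - 1) // per_ip_daily_limit


print(min_pool_size(1_000, 100))    # 10
print(min_pool_size(100_000, 100))  # 1000
```

Going from 1,000 to 100,000 requests a day under that assumed limit means going from about 10 IPs to about 1,000, which is why built-in rotation matters more than hand-managed lists.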

If your scraping or API process is breaking, slowing down, or giving inconsistent results… It’s time to look at your proxy setup. The right proxy server doesn’t just support your scraping — it saves it. It keeps your data flowing, your system stable, and your results clean.

Smart Proxy Integration with Your API Setup

Setting up a proxy with your API system isn’t just about plugging in an IP and hoping it works. If you want reliable, scalable, and clean results, you need to integrate your proxies the smart way — so everything runs smoothly behind the scenes.

Whether you’re using proxies for scraping search results, pulling product data, or tracking SEO performance, your setup has to be efficient. Here’s what a smart integration really looks like in 2026:

1. Use Proxy Rotation the Right Way

APIs often have request limits per IP. If you’re using a single IP address, it’s only a matter of time before you hit a block or rate limit. That’s where rotating proxies save the day.

A smart integration uses a pool of IPs that change automatically — either on each request or after a fixed time window. Most good proxy providers give you access to this kind of rotation through an endpoint. You don’t have to manage multiple IPs manually — your proxy handles it all for you.

Example: Instead of hardcoding 50 proxy IPs into your script, use a rotating proxy URL that balances traffic across hundreds of IPs without you touching a thing.
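To make concrete what a rotating endpoint does for you behind the scenes, here is a sketch of per-request rotation done by hand with a small pool. The proxy addresses are made up for illustration. With a provider’s rotating URL you would not write this yourself.

```python
import itertools
from typing import Iterator

# Hypothetical proxy addresses, for illustration only.
PROXY_POOL = [
    "http://10.0.0.1:8000",
    "http://10.0.0.2:8000",
    "http://10.0.0.3:8000",
]


def rotating_proxies(pool: list[str]) -> Iterator[str]:
    """Return an endless iterator that walks the pool in round-robin order."""
    if not pool:
        raise ValueError("proxy pool is empty")
    return itertools.cycle(pool)


rotation = rotating_proxies(PROXY_POOL)
for request_number in range(4):
    proxy = next(rotation)  # a new proxy for every request
    print(request_number, proxy)  # the fourth request wraps back to the first proxy
```

Round-robin is the simplest policy. Time-window rotation works the same way, except that you call `next(rotation)` when a timer expires rather than on every request.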

2. Choose Location-Based Targeting When Needed

Sometimes, your API requests need to look like they’re coming from a specific country or region. Let’s say you’re scraping search results in Korea or tracking product prices in Brazil. Smart proxy integration lets you set your desired location right in the request.

Most premium proxy providers let you choose a country or even a city. Depending on the provider, you set this in the proxy credentials, the request headers, or the URL parameters. Your scraper then behaves like a local user, helping you bypass geo-blocks and get region-specific data.
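One common pattern is to encode the target location in the proxy username. The sketch below builds such a proxy URL. The `-country-xx-city-yyy` suffix is a made-up convention for this example, and the host and credentials are placeholders. Every provider has its own syntax, so check its documentation.

```python
from typing import Optional
from urllib.parse import quote


def geo_proxy_url(user: str, password: str, host: str, port: int,
                  country: str, city: Optional[str] = None) -> str:
    """Build a proxy URL with the target location encoded in the username.

    The "-country-xx-city-yyy" suffix is a hypothetical format, not any
    specific provider's syntax.
    """
    username = f"{user}-country-{country.lower()}"
    if city:
        username += f"-city-{city.lower().replace(' ', '_')}"
    # Percent-encode credentials so characters like "@" or ":" cannot break the URL.
    return f"http://{quote(username, safe='')}:{quote(password, safe='')}@{host}:{port}"


print(geo_proxy_url("alice", "s3cret", "proxy.example.com", 8000, "KR"))
# http://alice-country-kr:s3cret@proxy.example.com:8000
```

Keeping the location in one helper means switching from Korea to Brazil is a one-argument change rather than a new configuration.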

3. Monitor and Handle Errors with Auto-Failover

Sometimes proxies fail. It’s normal — even the best ones can get temporarily blocked or slow down. A smart integration includes error detection and automatic retries. If one proxy IP fails, your system should instantly switch to another without crashing the whole process.

You can also log failed requests to detect patterns — maybe certain IPs are blacklisted, or specific endpoints are stricter. Over time, this helps you fine-tune your proxy strategy and keep your system running smoothly.
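The failover-and-logging idea above can be sketched as follows. The `fetch` argument is a caller-supplied function (an assumption of this sketch) that performs the request through a given proxy and raises on failure. That keeps the example independent of any particular HTTP library.

```python
import logging
from typing import Callable, Sequence

logger = logging.getLogger("proxy_failover")


def fetch_with_failover(url: str, proxies: Sequence[str],
                        fetch: Callable[[str, str], str]) -> str:
    """Try each proxy in turn and return the first successful response."""
    failed = 0
    for proxy in proxies:
        try:
            return fetch(url, proxy)
        except Exception as exc:  # broad on purpose: any failure triggers failover
            # Log every failure so patterns (bad IPs, strict endpoints) show up later.
            logger.warning("proxy %s failed for %s: %s", proxy, url, exc)
            failed += 1
    raise RuntimeError(f"all {failed} proxies failed for {url}")


# Stand-in for a real HTTP call, so the example runs without network access.
def fake_fetch(url: str, proxy: str) -> str:
    if proxy == "http://bad.example:8000":
        raise ConnectionError("blocked")
    return f"ok via {proxy}"


print(fetch_with_failover(
    "https://api.example.com/items",
    ["http://bad.example:8000", "http://good.example:8000"],
    fake_fetch,
))
# ok via http://good.example:8000
```

In a real setup you would likely add a short delay between attempts and treat some errors (such as a 404) as final rather than retrying them.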

4. Don’t Forget Speed and Session Management

For APIs that require session consistency — like logging in, saving a cart, or tracking a session-based flow — you need sticky sessions. That means using the same proxy IP for multiple steps of a process.

Smart proxy tools offer session-based IP control. You can stick to one IP as long as needed, then rotate when done. This avoids random disconnections or login failures during multi-step API actions.
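Many providers handle sticky sessions on their side, but the logic is simple enough to sketch client-side. The class below pins each session ID to one proxy until you release it. The class name and the session IDs are invented for this example.

```python
import itertools


class StickySessions:
    """Pin each session ID to one proxy until the session is released."""

    def __init__(self, pool: list[str]) -> None:
        if not pool:
            raise ValueError("proxy pool is empty")
        self._rotation = itertools.cycle(pool)
        self._assigned: dict[str, str] = {}

    def proxy_for(self, session_id: str) -> str:
        # Reuse the pinned proxy if there is one; otherwise take the next in rotation.
        if session_id not in self._assigned:
            self._assigned[session_id] = next(self._rotation)
        return self._assigned[session_id]

    def release(self, session_id: str) -> None:
        # Forget the pin so the next call for this ID takes the next proxy in rotation.
        self._assigned.pop(session_id, None)


sessions = StickySessions(["http://10.0.0.1:8000", "http://10.0.0.2:8000"])
login_proxy = sessions.proxy_for("checkout-42")
cart_proxy = sessions.proxy_for("checkout-42")
print(login_proxy == cart_proxy)  # True
```

Every step of the "checkout-42" flow goes out through the same IP. Calling `release("checkout-42")` once the flow finishes lets that ID rotate again.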

Also, keep an eye on proxy speed and uptime. Some providers throttle bandwidth. A smart integration monitors latency and replaces slow IPs automatically to keep your API requests fast and smooth.
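Latency monitoring can be as light as a rolling average per proxy. This sketch records response times and filters out proxies whose recent average exceeds a threshold. The threshold, window size, and proxy names are arbitrary values chosen for illustration.

```python
from collections import defaultdict, deque
from statistics import mean


class LatencyTracker:
    """Keep the last `window` response times per proxy and flag slow ones."""

    def __init__(self, max_avg_seconds: float, window: int = 20) -> None:
        self.max_avg_seconds = max_avg_seconds
        self._samples = defaultdict(lambda: deque(maxlen=window))

    def record(self, proxy: str, seconds: float) -> None:
        self._samples[proxy].append(seconds)

    def healthy(self, pool: list[str]) -> list[str]:
        """Return proxies whose recent average is within the threshold.

        Proxies with no samples yet are kept, since nothing is known about them.
        """
        result = []
        for proxy in pool:
            samples = self._samples.get(proxy)
            if not samples or mean(samples) <= self.max_avg_seconds:
                result.append(proxy)
        return result


tracker = LatencyTracker(max_avg_seconds=1.0)
tracker.record("fast", 0.2)
tracker.record("fast", 0.4)
tracker.record("slow", 2.0)
tracker.record("slow", 3.0)
print(tracker.healthy(["fast", "slow", "untested"]))  # ['fast', 'untested']
```

You would call `record` with the measured duration of each request, then pick the next proxy only from `healthy(pool)`.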

5. Use a Proxy Management Layer If Scaling

If you’re sending thousands (or millions) of requests daily, you’ll need more than a simple proxy plug-in. This is where tools like proxy managers, scraping frameworks, or built-in API logic help.

They route traffic through different proxies, control rotation, retry failed requests, and even log performance. For advanced teams, this is the difference between random trial and error and a fully optimized scraping machine.
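To show the shape of such a layer, here is a deliberately small, hypothetical manager that rotates through a pool, counts successes and failures per proxy, and benches proxies that fail several times in a row. It is a sketch of the idea, not a stand-in for a production proxy manager.

```python
from collections import Counter


class ProxyManager:
    """Rotate through a pool, count outcomes, and bench proxies that keep failing."""

    def __init__(self, pool: list[str], max_consecutive_failures: int = 3) -> None:
        self.pool = list(pool)
        self.max_consecutive_failures = max_consecutive_failures
        self.successes = Counter()
        self.failures = Counter()
        self._streak = Counter()  # consecutive failures per proxy
        self._cursor = 0

    def active(self) -> list[str]:
        # A proxy is active while its failure streak is below the limit.
        return [p for p in self.pool if self._streak[p] < self.max_consecutive_failures]

    def next_proxy(self) -> str:
        candidates = self.active()
        if not candidates:
            raise RuntimeError("no healthy proxies left")
        proxy = candidates[self._cursor % len(candidates)]
        self._cursor += 1
        return proxy

    def report(self, proxy: str, ok: bool) -> None:
        if ok:
            self.successes[proxy] += 1
            self._streak[proxy] = 0  # a success clears the streak
        else:
            self.failures[proxy] += 1
            self._streak[proxy] += 1


manager = ProxyManager(["http://10.0.0.1:8000", "http://10.0.0.2:8000"],
                       max_consecutive_failures=2)
manager.report("http://10.0.0.1:8000", ok=False)
manager.report("http://10.0.0.1:8000", ok=False)
print(manager.active())  # ['http://10.0.0.2:8000']
```

A fuller version would re-test benched proxies after a cooldown. Here a benched proxy only returns once something reports a success for it.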

Use Case Snapshots: The Best Fit for Your Project

When it comes to web scraping and API work, not every proxy works for every site. Some websites have aggressive bot protection. Others are geo-restricted. And some, like LinkedIn, are just plain risky without the right setup.

That’s why it’s critical to match your proxy type and strategy with your use case. Here’s a breakdown of the most common targets and which proxies work best for each.

1. Scraping SERPs (Google, Bing, etc.)

Challenge: High bot detection, strict request limits, frequent reCAPTCHAs

Best Fit: ✅ Rotating residential proxies or Google SERP-ready APIs with built-in proxy support

Search engines are some of the toughest platforms to scrape, especially Google. If you send too many requests from the same IP, you’ll face CAPTCHAs, bans, or misleading results. A rotating residential proxy setup mimics natural user behavior and switches IPs automatically, making it harder for Google to catch on.

Bonus tip: Use country-specific proxies to collect geo-targeted search data like local keyword rankings or location-based SERP features.

2. Scraping Google Maps

Challenge: Heavy JavaScript, location-sensitive content, strict bot defenses

Best Fit: ✅ Mobile proxies or rotating residential proxies with city-level targeting

Google Maps content, such as business listings or local results, changes based on the user’s IP location. A rotating residential proxy with accurate city-level targeting helps you imitate local users. This is critical for scraping business data, tracking local SEO results, or collecting lead data from maps.

Mobile proxies are also powerful here, because their IPs belong to real mobile carrier networks, which Google tends to trust more.

3. Scraping News Websites

Challenge: Geo-restrictions, paywalls, anti-bot services on major sites like The New York Times or BBC

Best Fit: ✅ Residential proxies or datacenter proxies with smart retries

For real-time news aggregation or trend tracking, speed is important, but so is access. Residential proxies help you get past geographical blocks or firewalls, especially when content differs by region.

If you are scraping a high volume of free-access news sites, datacenter proxies with retry logic can work well and are cost-effective. But for paywalled or otherwise restricted content, go residential.
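One way to combine the two tiers is to try the cheaper datacenter proxy first and escalate to a residential proxy only when the response looks like a block. The sketch below assumes that HTTP 403 and 429 signal a block or rate limit, and that `fetch` is a caller-supplied function returning a status code and body. Both are assumptions of this example.

```python
from typing import Callable

BLOCK_STATUSES = {403, 429}  # assumption: these codes signal a block or rate limit


def fetch_with_escalation(url: str, datacenter_proxy: str, residential_proxy: str,
                          fetch: Callable[[str, str], tuple[int, str]],
                          datacenter_attempts: int = 2) -> tuple[int, str, str]:
    """Return (status, body, tier_used), preferring the datacenter tier."""
    for _ in range(datacenter_attempts):
        status, body = fetch(url, datacenter_proxy)
        if status not in BLOCK_STATUSES:
            return status, body, "datacenter"
    # Every datacenter attempt was blocked: fall back to the residential tier.
    status, body = fetch(url, residential_proxy)
    return status, body, "residential"


# Stand-in for a real HTTP call: this site blocks the datacenter proxy.
def fake_fetch(url: str, proxy: str) -> tuple[int, str]:
    if proxy == "http://dc.example:8000":
        return 403, ""
    return 200, "article text"


print(fetch_with_escalation("https://news.example.com/story",
                            "http://dc.example:8000",
                            "http://resi.example:8000",
                            fake_fetch))
# (200, 'article text', 'residential')
```

This keeps most traffic on the cheaper tier and spends residential bandwidth only where it is needed.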

Conclusion

Choosing the best proxy server isn’t just a technical step — it’s the backbone of reliable web scraping and API performance in 2026. From Google SERPs to LinkedIn and beyond, every platform requires a specific proxy setup to stay undetected, deliver clean data, and scale safely.

Whether you’re working with rotating residential proxies, sticky sessions, or location-targeted IPs, matching the right tool to the right task is what separates casual scrapers from serious data professionals. Invest in smart proxy choices now, and your API projects will run faster and more smoothly, with far less risk of being blocked.

FAQs

How do the best proxy servers help with API rate limits?

The best proxy servers are built to rotate IPs smartly, helping you avoid rate limits and bans. If an API only allows 100 requests per IP per day, a rotating proxy setup splits your requests across many IPs, making it seem like dozens (or hundreds) of real users are accessing the data. This way, top-tier proxies help you keep things steady and avoid blocks, especially at scale.

Do I always need proxies for web scraping or API work?

Not always, but if you’re making more than a handful of requests, especially to sensitive sites like Google, Amazon, or LinkedIn, then yes. Proxies protect your IP from getting banned, help you bypass geo-restrictions, and let you scale up without getting blocked. If you’re doing serious data collection or real-time SEO tracking, proxies are a must-have in your stack.

What should I look for when comparing proxy servers?

When comparing the best proxy servers, don’t just look at price. Focus on:

➜ IP type (residential, mobile, datacenter)

➜ Rotation logic (per request, per session)

➜ Geo-targeting options

➜ Success rate on tough sites (like Google SERPs or Amazon)

➜ Support and uptime guarantees

Can proxies help me collect location-specific data?

Yes, and that’s one of the biggest benefits. Geo-targeted proxies let you route your requests through IPs in specific countries or cities. So if you need to track search results in Korea, check Amazon listings in Brazil, or pull local business data from Google Maps, your proxy makes it look like you’re browsing from that exact location. It’s one of the most reliable ways to get location-accurate data at scale.